Xilinx Developer Forum: Claimed to be the highest-performance convolutional neural network (CNN) on an FPGA, Omnitek’s CNN is available now. The deep learning processing unit (DPU) is future-proofed, explained CEO Roger Fawcett, due to the programmability of the FPGA. It was demonstrated as a GoogLeNet Inception-v1 CNN, using eight-bit integer resolution. It achieved 16.8 tera operations per second (TOPS) ...

FPGA / PLD

The latest Electronics Weekly product news on FPGAs (field-programmable gate arrays) and PLDs (programmable logic devices), which can be (re)configured by a user after manufacturing.

Accelerator cards exploit FPGA technology to boost server performance

Two accelerator cards, the Alveo U200 and Alveo U250, announced by Xilinx, improve latency rates

Xilinx embraces heterogeneous computing with first ACAPs

The first adaptive compute acceleration platform (ACAP) products are unveiled

ASIC asserts rights to ML chip market

According to a report published by Allied Market Research, application-specific integrated circuits (ASICs) will dominate the machine learning (ML) chip market. The Machine Learning Chip Market report forecasts that the global ML chip market will be worth $37.8 billion in 2025, a compound annual growth rate (CAGR) of 40.8% between 2018 and 2025. The report considers chips by type ...

Partners bridge HLS and FPGA technology

Designers can use the integrated development environment (IDE) to quickly go from C++ to FPGA using the HLS and Achronix’s ACE design tools. The combination can reduce the development effort for 5G wireless and other design applications that require high-performance FPGA technology in SoCs, configured using a proven C‑based design flow. Ellie Burns, director of marketing, Calypto Systems division, Mentor, said: “Achronix eFPGA offers a tremendous ...

DAC explores the role of AI and ML across the markets

The 55th Design Automation Conference (DAC) will cover many topics for chip and system designers

Neural network accelerators for Lattice FPGAs

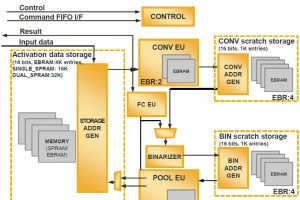

Lattice has introduced two hardware artificial intelligence algorithm accelerators for its FPGAs, one for binarised neural networks (BNNs) and one for convolutional neural networks (CNNs). Both are aimed at implementing neural networks in consumer and industrial network-edge products. They are not suitable for network training, which must be done elsewhere. ‘Binarized neural network (BNN) accelerator’ supports 1-bit weights, has 1-bit activation ...

Xilinx reports record revenues and searches for UK engineers

Xilinx has posted record revenues of $2.54 billion for the fiscal year 2018, an increase of 8% from last year. Revenues were $673 million for Q4 of FY 2018, up 7% from the prior quarter and up 10% from Q4 FY 2017. CEO Victor Peng attributes the growth to “a three-pronged approach”, focusing on data centres, with the introduction of ...

EW: Video Interview – Lattice advancing Edge Connectivity and Edge Computing

At Embedded World 2018, we caught up with Deepak Boppana of Lattice Semiconductor.

Fast SoC module is supported with build environment

Based on the Xilinx Zynq UltraScale+ MPSoC, the Mercury+XUI is Enclustra’s fastest SoC module. It will be presented at Embedded World 2018 (Hall 3-210). The module has up to 747,000 system logic cells, six Arm processors, a GPU and up to 294 user I/Os. Built-in interfaces include two Gigabit Ethernet, USB 3.0 and USB 2.0, 16 multi-gigabit transceivers (MGTs) ...

Electronics Weekly Electronics Design & Components Tech News
