The horseshoe crab (Limulus polyphemus) is recognised by scientists as an interesting model for vision research because the animal is large enough to study and its retinal neurons are easily accessible. The crab has several eyes distributed over its body, as well as photoreceptors located on its tail (main image).

The crab eye can be considered a matrix of photoreceptors with an integrated neural network located close by. This network (the processing unit) reduces the redundancy of information in the data stream by prioritising selected features within the field of view. The crab needs to detect patterns critical to survival, such as edges or movement; given the limited capabilities of its brain, enjoying the landscape is irrelevant.
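The edge emphasis described above is classically explained in Limulus by lateral inhibition, where each receptor's output is reduced by the activity of its neighbours. The following is a minimal sketch of that idea, not a model of the real retina: a 1-D row of receptors, a hypothetical inhibition weight `k`, and outputs clamped at zero. Uniform regions are damped, while the two sides of an edge are pushed apart.

```c
#include <stddef.h>

/* Hypothetical 1-D lateral inhibition:
 *   out[i] = in[i] - k * (in[i-1] + in[i+1]) / 2, clamped at zero.
 * Receptors at either end reuse their own value for the missing neighbour. */
void lateral_inhibition(const float *in, float *out, size_t n, float k)
{
    for (size_t i = 0; i < n; i++) {
        float left  = (i > 0)     ? in[i - 1] : in[i];
        float right = (i + 1 < n) ? in[i + 1] : in[i];
        float r = in[i] - k * 0.5f * (left + right);
        out[i] = (r > 0.0f) ? r : 0.0f;   /* firing rates cannot be negative */
    }
}
```

With `in = {1,1,1,1,5,5,5,5}` and `k = 0.8`, the output is roughly `{0.2, 0.2, 0.2, 0, 2.6, 1, 1, 1}`: the step is marked by a dip on the dark side and a peak on the bright side, while the flat regions carry little signal.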

The horseshoe crab is sometimes called a living fossil because of its robustness against environmental change. Engineers can mimic this quality by treating a microcontroller integrated with sensors as the equivalent of the crab's sensory structure, and so build a reliable, distributed edge AI system.

Edge AI approach

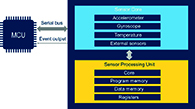

The classic architecture of an edge AI sensor system consists of a microcontroller (MCU) acquiring and processing data from the connected sensor(s). In practice, this means allocating the MCU's computation time and resources to executing a machine-learning (ML) model. This approach may not be efficient for certain use cases, especially if real-time execution is a major requirement or if current consumption must be reduced.

Figure 2: Block diagram of ISPU

In the standard approach, the MCU reads the raw sensor data and executes an ML model to process it. In a distributed AI approach, the sensor's processing unit executes the ML model on the sensor data and the MCU reads only the processed results.

It is possible to integrate the processing stage into the sensor, distributing AI tasks to the edge. The ML model is then executed in the sensor and only the inference results are transmitted to the MCU. This has significant consequences: the MCU is relieved of heavy computational tasks and only needs to wait for events (external interrupts), so it can enter a low-power mode to reduce current consumption or allocate its resources to other tasks. The data stream created by an AI-enabled sensor is greatly reduced (events only), and serial bus traffic falls as well, decreasing noise emission. In terms of overall system reliability, this kind of parallel processing allows the code running on both the MCU and the sensor to be simplified, which helps reduce the probability of faults.
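A back-of-the-envelope sketch of the bus-traffic saving, under stated assumptions: in the standard approach the MCU reads all six axes (three accelerometer, three gyroscope) as 16-bit values at the 416 Hz output data rate used in the lab example, whereas in the distributed approach the sensor sends one hypothetical one-byte result per 128-sample window.

```c
/* Bytes per second the MCU must read when it pulls every raw sample. */
double raw_bytes_per_s(double odr_hz, unsigned axes, unsigned bytes_per_axis)
{
    return odr_hz * axes * bytes_per_axis;
}

/* Bytes per second when the sensor sends only one result per analysis window. */
double result_bytes_per_s(double odr_hz, unsigned window_samples,
                          unsigned bytes_per_result)
{
    return odr_hz / window_samples * bytes_per_result;
}
```

With these assumptions, `raw_bytes_per_s(416.0, 6, 2)` gives 4992 B/s against 3.25 B/s from `result_bytes_per_s(416.0, 128, 1)`, a factor of 12 × 128 = 1536. If the sensor only interrupts when something happens (an anomaly, for example), the traffic is lower still.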

Sensor with processing unit

A new device category, the intelligent sensor processing unit (ISPU), combines an inertial measurement unit (accelerometer plus gyroscope) with an embedded sensor processing unit in a single package.

Figure 3: The ISPU RISC core structure

Processing inside the sensor is handled by a low-power programmable RISC core with a selectable clock frequency (5 MHz or 10 MHz), 32 kB of program memory and 8 kB of data memory. It can process internal data from the accelerometer and the gyroscope, as well as six bytes of external data from sensors connected to the ISPU through an auxiliary I2C bus.
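A rough budget check against these limits can be sketched as a helper that compares a model's footprint with the 32 kB of program memory and 8 kB of data memory quoted above. The footprint values are inputs you supply. A real budget must also cover sensor configuration code and any pre-processing, which this hypothetical helper ignores.

```c
#include <stdbool.h>

/* ISPU memory sizes quoted in the article, in kB. */
#define ISPU_PROGRAM_KB 32.0
#define ISPU_DATA_KB     8.0

/* Reports the fraction of each memory a model would occupy and
 * returns true only if it fits in both. */
bool ispu_fits(double code_kb, double data_kb, double *code_frac, double *data_frac)
{
    *code_frac = code_kb / ISPU_PROGRAM_KB;
    *data_frac = data_kb / ISPU_DATA_KB;
    return *code_frac <= 1.0 && *data_frac <= 1.0;
}
```

For the 3.2 kB model reported in the lab example below, `ispu_fits(3.2, 3.2, &c, &d)` returns true with about 10% of program memory and 40% of data memory used, so data memory is the tighter constraint.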

Tools

ISPU application development is supported by the GCC compiler toolchain, although this approach requires detailed knowledge of the sensor architecture.

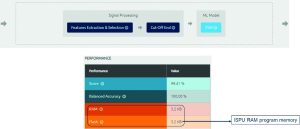

Figure 4: ML model structure and performance

An alternative is NanoEdge AI Studio (NEAI Studio), an automated ML design tool from STMicroelectronics. It comes as a standalone GUI application that, according to the company, embedded developers can use without deep knowledge of ML. For certain hardware configurations, it can also generate a ready-made data-logger binary. While creating a model, the application finds an optimised pre-processing chain for the given data, selects the best AI model, tunes its hyper-parameters, and performs learning and testing. The benchmark phase produces a batch of candidate ML models; the developer selects the best-performing model identified by the tool or tests alternatives.

It supports four ML use cases: Anomaly Detection (dynamic model), N-Class Classifier (static model), 1-Class Classifier (static model) and Extrapolation (static model).

The tool is designed to take multiple time series (vectors) as input from sensors such as MEMS, voltage, current, temperature or time-of-flight sensors, and targets application domains such as predictive maintenance, human activity recognition, medical care and motor control.

The basic data structure for the tool is a signal with a defined temporal length. The size and sampling frequency of the signal should remain constant throughout the project. A set of independent signals (which need not be adjacent in time) forms the data set for a given class. Following the basic rule of one class, one file, the input format is CSV (text file), and inputs can be provided either from a file or as a CSV-formatted data stream over a virtual COM port (VCP).
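The one-signal-per-line layout can be sketched as a small formatting routine, assuming that a multi-axis signal is written with the axis values interleaved sample by sample (x0,y0,z0,x1,...) and comma-separated; the tool's documentation should be checked for the exact separator and ordering it expects. The function name and the three-decimal precision are illustrative choices.

```c
#include <stdio.h>
#include <stddef.h>

/* Writes one signal (n_samples x n_axes values, sample-major) as a single
 * CSV line "x0,y0,z0,x1,y1,z1,...\n". Returns the number of characters
 * written, or -1 if buf is too small. */
int format_signal_csv(const float *samples, size_t n_samples, size_t n_axes,
                      char *buf, size_t buf_len)
{
    size_t pos = 0;
    size_t total = n_samples * n_axes;
    for (size_t i = 0; i < total; i++) {
        int w = snprintf(buf + pos, buf_len - pos, "%s%.3f",
                         (i == 0) ? "" : ",", (double)samples[i]);
        if (w < 0 || (size_t)w >= buf_len - pos)
            return -1;                 /* value did not fit */
        pos += (size_t)w;
    }
    if (pos + 1 >= buf_len)
        return -1;                     /* no room for '\n' and terminator */
    buf[pos++] = '\n';
    buf[pos] = '\0';
    return (int)pos;
}
```

On the target, each formatted line could be passed to printf(), which the lab example below redirects to UART/VCP, so the tool receives one CSV line per acquired signal.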

Generated ML models can be static or dynamic. A static model is pre-trained, while a dynamic model can also learn on the target MCU. This lets it adapt to its environment because knowledge is acquired incrementally; the knowledge is held in a static structure in RAM, so backup and restore are possible. The models are based on well-known ML algorithms. NEAI Studio outputs a library and companion header files implementing the selected ML model behind a simplified ANSI-C API: a dynamic model exposes three functions, Init(), Learn() and Detect(), while a static model exposes two, Init() and Detect(). According to the company, the library footprint can go down to a few kB of RAM and flash memory while still being capable of learning on the target at runtime. Cortex-M MCUs and ISPU-type sensors are supported.
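The Init/Learn/Detect life cycle of a dynamic model, and the reason a static RAM structure makes backup and restore simple, can be illustrated with a toy model. The functions below (`model_init`, `model_learn`, `model_detect`, `model_backup`, `model_restore`) are hypothetical stand-ins, not the API of the generated NanoEdge AI library, whose actual names and signatures come from its generated header. The "model" just learns a running average of nominal signals and scores new signals by their mean squared distance to it.

```c
#include <stddef.h>
#include <string.h>

#define SIGNAL_LEN 128   /* samples per signal, as in the lab example */

/* All learned knowledge lives in one static structure in RAM,
 * so it can be backed up and restored with a plain copy. */
typedef struct {
    float mean[SIGNAL_LEN];   /* running average of learned signals */
    unsigned count;           /* number of learn calls so far */
} model_state_t;

static model_state_t g_model;

void model_init(void)
{
    memset(&g_model, 0, sizeof g_model);
}

/* Incremental update: mean += (x - mean) / count. */
void model_learn(const float *signal)
{
    g_model.count++;
    for (size_t i = 0; i < SIGNAL_LEN; i++)
        g_model.mean[i] += (signal[i] - g_model.mean[i]) / (float)g_model.count;
}

/* Similarity in (0, 100]: 100 means identical to the learned average. */
float model_detect(const float *signal)
{
    float mse = 0.0f;
    for (size_t i = 0; i < SIGNAL_LEN; i++) {
        float d = signal[i] - g_model.mean[i];
        mse += d * d;
    }
    mse /= SIGNAL_LEN;
    return 100.0f / (1.0f + mse);
}

void model_backup(model_state_t *dst)        { *dst = g_model; }
void model_restore(const model_state_t *src) { g_model = *src; }
```

A typical sequence is `model_init()`, a number of `model_learn()` calls on nominal signals, then `model_detect()` on each new signal; a backup taken after learning lets the device return to a known-good state after a reset.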

Lab example

An example project was created to demonstrate NEAI Studio's ability to create ML models with a small binary size. The goal of the project was to detect anomalies in a portable fan, where the nominal condition was free running at a given speed and the abnormal condition was a clogged air stream. The specification was as follows:

* Use case: Anomaly Detection (Binary Classifier), model capable of learning on the target,

* MCU: STM32L476 (Cortex-M4, low-power MCU family),

* Sensor: ISM330ISN, FS = ±2 g, attached to the top of the fan,

* Sensor output data rate: ODR = 416 Hz, 1/ODR ≈ 2.4 ms, signal = 128 samples ≈ 0.3 s.

It was important that the temporal length of the signal covered the full expected range of phenomena under observation, for example the vibration frequency of the fan motor as well as mechanical resonances.
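The timing figures in the specification, and which vibration frequencies a window can capture, can be checked with a few helper functions. The sketch assumes each axis is delivered as a signed 16-bit value spanning the full ±2 g range, which works out at about 0.061 mg per LSB; in practice the nominal sensitivity from the datasheet should be used. The bin spacing applies only if the window is analysed in the frequency domain.

```c
#include <stdint.h>

/* Sample period in milliseconds for a given output data rate. */
double sample_period_ms(double odr_hz) { return 1000.0 / odr_hz; }

/* Time covered by one signal of n samples, in seconds. */
double signal_duration_s(double odr_hz, unsigned n) { return n / odr_hz; }

/* Spacing between frequency bins if the n-sample window is transformed (FFT). */
double bin_spacing_hz(double odr_hz, unsigned n) { return odr_hz / n; }

/* Highest frequency the sampling can represent (Nyquist limit). */
double nyquist_hz(double odr_hz) { return odr_hz / 2.0; }

/* Raw 16-bit reading to g, assuming +-fs_g maps linearly onto 65536 codes. */
double raw_to_g(int16_t raw, double fs_g) { return raw * (2.0 * fs_g / 65536.0); }
```

At 416 Hz, the sample period is about 2.404 ms and 128 samples span about 0.308 s; frequency bins are 3.25 Hz apart, and components above 208 Hz cannot be represented without aliasing. A fan's motor vibration and resonances therefore need to lie below that limit and repeat several times within the 0.3 s window.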

The input data set consisted of 30 signals representing the vibration pattern of the free-running fan and 30 signals covering the abnormal behaviour, with the air stream clogged by a piece of paper. The data set was collected using a dedicated logger providing a CSV-formatted output stream (printf() output redirected to UART/VCP). It served as context data for model creation and testing, while the learning stage itself was executed on the target or in an emulator, one of the tool's features. The first action at runtime was the learning phase, during which only nominal signals were provided; the tool suggested a minimum of 20 learning iterations to reach the given model performance. Learning could be triggered again at any time during runtime, adding to the model's knowledge.
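The run-time procedure (learn on nominal signals until the suggested minimum is reached, then detect, with the option to resume learning later) can be expressed as a small controller. This is a hypothetical sketch that does not depend on any model library: the caller would pass each signal to the model's Learn() function while `ctl_on_signal()` returns true, and to Detect() otherwise.

```c
#include <stdbool.h>

typedef enum { PHASE_LEARNING, PHASE_DETECTING } phase_t;

typedef struct {
    phase_t phase;
    unsigned learned;     /* learning iterations completed so far */
    unsigned min_learn;   /* e.g. 20, the minimum suggested in this project */
} learn_ctl_t;

void ctl_init(learn_ctl_t *c, unsigned min_learn)
{
    c->phase = PHASE_LEARNING;
    c->learned = 0;
    c->min_learn = min_learn;
}

/* Call once per acquired signal; returns true if it should go to Learn(). */
bool ctl_on_signal(learn_ctl_t *c)
{
    if (c->phase == PHASE_DETECTING)
        return false;
    c->learned++;
    if (c->learned >= c->min_learn)
        c->phase = PHASE_DETECTING;   /* enough nominal signals seen */
    return true;
}

/* Re-enters learning for 'extra' more signals; earlier knowledge is kept,
 * so the iteration count continues from where it was. */
void ctl_relearn(learn_ctl_t *c, unsigned extra)
{
    c->phase = PHASE_LEARNING;
    c->min_learn = c->learned + extra;
}
```

Keeping the phase logic separate from the model makes it easy to re-trigger learning from a button press or a host command without touching the model code.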

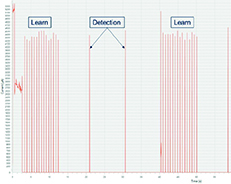

Figure 5: MCU current consumption chart

A Z-score model was generated, fed by an automatically created pre-processing pipeline. The model library used very few resources: 3.2 kB of RAM and 3.2 kB of program memory in the inertial measurement unit.
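The article does not describe which features the generated pipeline extracts, so the following only illustrates the Z-score principle, under stated assumptions. A single hypothetical feature (the mean-square energy of each window) is used. Its mean and variance are learned incrementally from nominal windows with Welford's method, and a window is flagged as anomalous when the feature lies more than `k` standard deviations from the learned mean. The comparison is done on squared values, so no square root is needed.

```c
#include <stddef.h>
#include <stdbool.h>

/* Running statistics of one feature, learned from nominal windows (Welford). */
typedef struct {
    unsigned n;
    double mean;
    double m2;   /* sum of squared deviations from the running mean */
} zscore_t;

/* Hypothetical feature: mean-square value (signal energy) of one window. */
double window_energy(const float *x, size_t len)
{
    double acc = 0.0;
    for (size_t i = 0; i < len; i++)
        acc += (double)x[i] * x[i];
    return acc / len;
}

void zscore_learn(zscore_t *z, double feature)
{
    z->n++;
    double delta = feature - z->mean;
    z->mean += delta / z->n;
    z->m2 += delta * (feature - z->mean);
}

/* Anomaly if |feature - mean| > k * stddev, tested as d^2 > k^2 * variance. */
bool zscore_is_anomaly(const zscore_t *z, double feature, double k)
{
    if (z->n < 2)
        return false;                  /* spread cannot be estimated yet */
    double var = z->m2 / (z->n - 1);   /* sample variance */
    double d = feature - z->mean;
    return d * d > k * k * var;
}
```

A threshold of `k = 3` is a common starting point; in this sketch it is a hypothetical tuning parameter, not a value taken from the generated model.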

The sensor’s capability to autonomously detect anomalies in the fan enabled the MCU to stay in a low-power mode most of the runtime, which is visible in the chart showing current consumption over time.

As the sensor code is stored in volatile memory, the first part of the curve (up to 2 s) shows the program being uploaded over the I2C bus and the component being initialised. After that, the MCU enters a low-power state; following an external interrupt from the sensor, it wakes up to process an event (the end of a learning iteration or an anomaly detection) and then returns to low-power mode.
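The wake-on-event behaviour can be sketched as an interrupt flag plus a main loop that sleeps whenever no event is pending. Everything here is hypothetical and simulated so it runs on a host: the event codes, `read_sensor_event()` (standing in for a bus read of the sensor's result register) and `enter_low_power()` (standing in for, for example, a WFI instruction on a Cortex-M).

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical event codes reported by the sensor program. */
typedef enum { EVT_NONE = 0, EVT_LEARN_DONE = 1, EVT_ANOMALY = 2 } sensor_event_t;

volatile bool g_irq_pending = false;   /* set only by the interrupt handler */
uint8_t g_sim_result = EVT_NONE;       /* simulated sensor result register */
unsigned g_sleeps, g_learn_done, g_anomalies;

/* GPIO interrupt handler for the sensor's interrupt pin: just flag it. */
void sensor_irq_handler(void) { g_irq_pending = true; }

/* Host-side simulation of the sensor raising an interrupt. */
void simulate_sensor_interrupt(uint8_t evt)
{
    g_sim_result = evt;
    sensor_irq_handler();
}

/* Stand-in for reading the result register over I2C/SPI. */
static uint8_t read_sensor_event(void) { return g_sim_result; }

/* Stand-in for a low-power mode; here it only counts sleeps. */
static void enter_low_power(void) { g_sleeps++; }

/* One main-loop iteration: sleep if idle, otherwise handle the event.
 * Real firmware should mask interrupts around the check-and-sleep step so
 * that an interrupt arriving just before sleeping is not missed. */
void main_loop_step(void)
{
    if (!g_irq_pending) {
        enter_low_power();
        return;
    }
    g_irq_pending = false;
    switch ((sensor_event_t)read_sensor_event()) {
    case EVT_LEARN_DONE: g_learn_done++; break;
    case EVT_ANOMALY:    g_anomalies++;  break;
    default:             break;
    }
}
```

The handler does as little as possible and leaves the bus transaction to the main loop, which keeps interrupt latency short and lets the MCU return to sleep as soon as the event has been processed.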

This demonstrates that machine learning can be implemented with limited hardware resources in a distributed edge AI architecture. Using an automated machine-learning design tool, designers can shorten development time without needing an experienced data scientist or a strong data-science background. Combining a microcontroller with an AI-enabled sensor also helps to reduce overall power consumption and enhance system reliability.

Electronics Weekly Electronics Design & Components Tech News
