There are two versions:

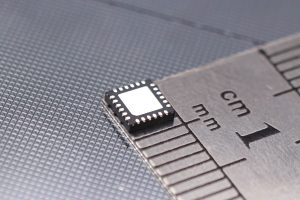

- NPN32 with 32 int8 MACs

- NPN64 with 64 int8 MACs

“Both of which benefit from Ceva-NetSqueeze for direct processing of compressed model weights,” according to the company. “NPN32 is optimised for most TinyML workloads targeting voice, audio, object detection and anomaly detection. The NPN64 provides 2x performance acceleration using weight sparsity, greater memory bandwidth, more MACs and support for 4bit weights for more complex on-device AI use such as object classification, face detection, speech recognition and health monitoring.”
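As a rough illustration of why 4bit weights and weight sparsity matter, the sketch below packs signed 4bit weights two to a byte and skips zero weights during a multiply-accumulate loop. It is a minimal sketch under stated assumptions, not Ceva's implementation: the function names (`pack_int4`, `unpack_int4`, `sparse_dot`), the low-nibble-first packing order and the zero-skipping loop are all hypothetical, and the Ceva-NetSqueeze compression format is not described in the article.

```python
def pack_int4(weights):
    """Pack signed 4-bit weights (-8..7) two per byte, low nibble first.

    The packing order is an assumption made for illustration only.
    """
    weights = list(weights)
    if len(weights) % 2:
        weights.append(0)  # pad to an even count so every byte is full
    packed = bytearray()
    for lo, hi in zip(weights[0::2], weights[1::2]):
        for w in (lo, hi):
            if not -8 <= w <= 7:
                raise ValueError(f"weight {w} does not fit in 4 bits")
        packed.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(packed)


def unpack_int4(packed, count):
    """Recover `count` signed 4-bit weights from the packed bytes."""
    out = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            # Nibbles 8..15 encode the negative values -8..-1.
            out.append(nibble - 16 if nibble >= 8 else nibble)
    return out[:count]


def sparse_dot(activations, packed_weights):
    """Dot product of int8 activations with packed 4-bit weights.

    Zero weights are skipped, so the returned MAC count shows how
    sparsity reduces the work. Returns (accumulator, macs_performed).
    """
    weights = unpack_int4(packed_weights, len(activations))
    acc = 0   # a wide accumulator, as int8 products overflow 8 bits
    macs = 0
    for a, w in zip(activations, weights):
        if w == 0:
            continue  # nothing to accumulate for a zero weight
        acc += a * w
        macs += 1
    return acc, macs


weights = [3, 0, -8, 7, 0]
activations = [10, -128, 5, 2, 127]
packed = pack_int4(weights)
print(len(packed), sparse_dot(activations, packed))
```

In this toy case five weights occupy three bytes instead of five, and only three of the five multiply-accumulates are carried out, which is the kind of saving that weight sparsity and narrower weights aim for.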

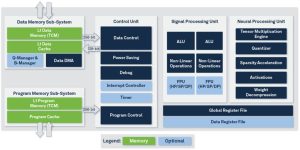

Each single core, said Ceva, includes enough hardware to handle neural network compute, feature extraction, control code and DSP code without an external microcontroller.

NeuPro Studio is the associated AI stack. It works with AI inference frameworks including TensorFlow Lite for Microcontrollers (TFLM) and microTVM (µTVM).

A pre-trained model zoo has been created with TinyML models for voice, vision and sensing use.

Electronics Weekly Electronics Design & Components Tech News
